Since March, the Algorithm Productions team has taken the opportunity to develop new virtual production and animation projects in lockdown, harnessing the creative capabilities of the real-time graphics tool Notch.

“There has been lots of excitement around virtual production in Unreal Engine with nDisplay and we’ve been working on demos to publish over the coming months,” Creative Director Kev Freeney told TPi.

He referred to similar work in Notch with a view to augmenting livestreams – exploring what a stage can be when it doesn’t need physical infrastructure. “We’ve been talking loads about why somebody watches a streamed music event – what they expect from it, how to augment in a meaningful way rather than just splashing VFX everywhere for the sake of it,” he commented. “Simultaneously, we’re experimenting with in-browser multi-user event spaces with WebGL and exploring other aspects of WebGL and various JavaScript libraries to see how we can leverage some of our existing skills in a web environment.”

One such lockdown project Freeney referred to is a recent collaboration on the music video for The Blizzards’ Pound the Pavement. “This was a response to us being in lockdown and not being able to shoot a more traditional live performance music video for the band,” he revealed. “It’s about people using technology to connect while in isolation. We wanted to show our audience that we can all still create beautiful and memorable moments by working together – even remotely.”

The concept involves two digital avatars falling in love with each other online. “During lockdown, so many of us were using technology to communicate and feel connected with our friends and family around the world – we wanted to expand this and develop it into a contemporary digital duet.

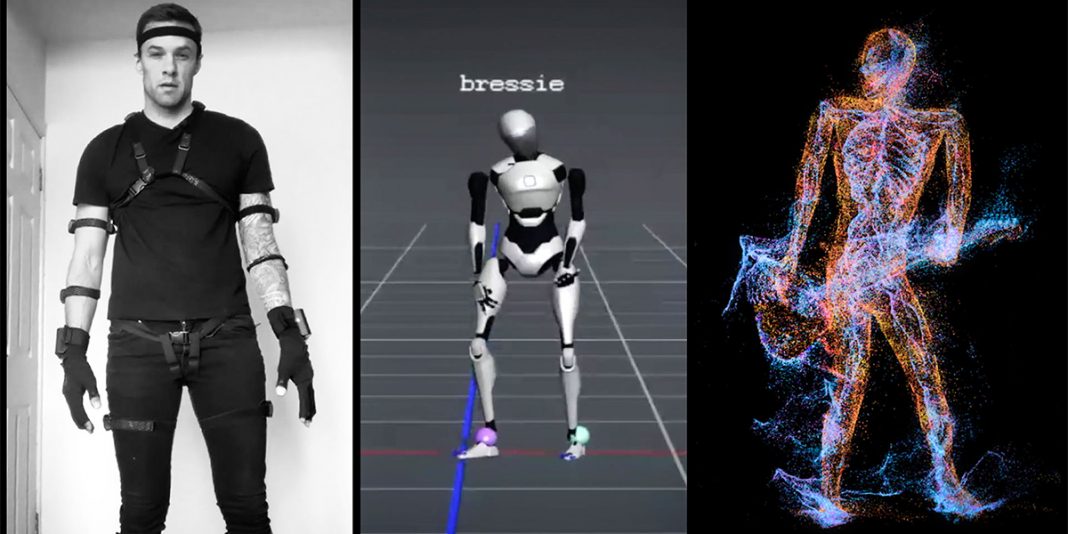

“The song is also about taking back control when you think you have lost it,” he underlined. “The lyrics project a message of hope, grit and determination to overcome the challenges we are all facing today.” The project marked the collective’s first experience with a Noitom mocap suit. “It was really enjoyable,” Freeney quipped. “It’s a great tool to use; we really pushed how far we could go with it with a very small budget. As we only had one suit, we had to practice and rehearse all the dance moves with our Choreographer, Janna Kemperman, from both characters’ perspectives.”

As the band was in isolation, Algorithm delivered a suit to each member of the band and controlled the capture remotely through the Noitom App over TeamViewer and Zoom. “This was a healthy but rewarding challenge,” Freeney recalled. “We can’t wait to see what we can do with this kind of technology in a live event environment.”

For the Blizzards music video, the MoCap data was remotely recorded using a Noitom suit. “We used the data to animate some models in C4D, attaching instruments where necessary, and exported as animated FBX files to bring into Notch,” Freeney added. “We also animated the camera locations in C4D and linked that data in Notch too.”

Inside Notch, most of the video was achieved with a combination of mesh emitters and particle effectors. Freeney explained: “The big challenge there was keeping definition in the characters while retaining the ‘dots in a void’ particle look so we added a few more meshes with polygons clustered around muscle groups or important bones, so we could give particles in these areas slightly different behaviour.”
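Notch is a node-based tool, so none of this was written as code, but the idea Freeney describes can be sketched in a few lines of Python. The example below is only an illustration under stated assumptions: the group names, the `count` and `jitter` settings and the `emit` function are hypothetical and are not part of Notch or of Algorithm's project. It scatters particles across the triangles of several meshes and gives each mesh group its own behaviour, here a different amount of positional jitter.

```python
import random


def sample_on_triangle(a, b, c, rng):
    """Return a uniformly distributed point on the triangle a, b, c."""
    u, v = rng.random(), rng.random()
    if u + v > 1.0:
        # Reflect the sample back inside the triangle.
        u, v = 1.0 - u, 1.0 - v
    w = 1.0 - u - v
    return tuple(w * a[i] + u * b[i] + v * c[i] for i in range(3))


def emit(groups, rng):
    """Emit particles from each mesh group using that group's own settings.

    `groups` maps a hypothetical group name to a dict holding:
      'triangles': list of (a, b, c) vertex triples
      'count':     number of particles to emit from this group
      'jitter':    maximum random offset applied to each coordinate
    """
    particles = []
    for name, group in groups.items():
        for _ in range(group["count"]):
            tri = rng.choice(group["triangles"])
            point = sample_on_triangle(*tri, rng)
            # Per-group behaviour: a small jitter keeps detail areas crisp,
            # a larger one gives the looser 'dots in a void' look.
            j = group["jitter"]
            point = tuple(x + rng.uniform(-j, j) for x in point)
            particles.append((name, point))
    return particles
```

Giving a small detail mesh, such as one clustered around the hands, a high `count` and a low `jitter` would keep that area readable while the main body mesh stays diffuse.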

A nervous system model was rigged and animated with the same data, to add further definition to the characters. “This was created by tracing over anatomical drawings with the spline pen tool in C4D and putting the spline through a sweep nurbs operation,” he continued. “Adding collision with a ground plane proved a very effective way to suggest the shape of the space without showing it explicitly.”
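The ground-plane trick can also be shown in miniature. The following Python sketch is an assumption-laden illustration rather than a description of how Notch implements collision: the `step` function, the gravity value and the `bounce` factor are all hypothetical. Each particle falls under gravity and, if it would pass below the plane, is placed back on it with a damped, reversed vertical velocity. The floor is never drawn; its shape emerges from where the particles stop.

```python
def step(pos, vel, dt, gravity=-9.81, ground_y=0.0, bounce=0.3):
    """Advance one particle by dt seconds and collide it with a ground plane.

    pos and vel are (x, y, z) tuples; y is the vertical axis.
    All parameter values are illustrative assumptions.
    """
    x, y, z = pos
    vx, vy, vz = vel

    # Simple Euler integration under gravity.
    vy += gravity * dt
    x, y, z = x + vx * dt, y + vy * dt, z + vz * dt

    # Collision: keep the particle on or above the plane and
    # reflect its vertical velocity, losing energy on each bounce.
    if y < ground_y:
        y = ground_y
        vy = -vy * bounce

    return (x, y, z), (vx, vy, vz)


def simulate(pos, vel, steps, dt=0.01):
    """Return the list of positions visited over the given number of steps."""
    path = [pos]
    for _ in range(steps):
        pos, vel = step(pos, vel, dt)
        path.append(pos)
    return path
```

Calling `simulate((0.0, 1.0, 0.0), (0.5, 0.0, 0.0), 500)` drops a particle from one unit above the plane while it drifts sideways; it bounces a few times and then settles on the invisible floor.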

Algorithm was founded in the BLOCK T studios in Smithfield. BLOCK T had itself been founded during the last great recession in response to the large number of properties left vacant by the collapse. Using these spaces for studios, galleries, event spaces and other creative uses, the company thrived at a time when others could not.

Unfortunately, as these spaces became marketable again and the city put economic imperatives above all others, BLOCK T and many other cultural and event spaces were unable to compete. “We were born in an environment that meant that we had to be adaptable in the face of uncertain conditions. Over the course of the next several years, our drive for creativity brought us through various challenges in various industries. From our beginnings in live music and events, to experiential marketing and ‘out of home’ installations, to the work we are doing now in immersive technology and mixed reality, adaptability has reinforced our creativity throughout.”

One benefit of being a technologically forward-facing company, Freeney explained, is that it puts the collective in a position to explore new ideas and solutions relatively quickly, adapting to whatever situations may arise. “This may come as a result of our beginnings in the live events industry where things can go haywire in a second,” he said. “We are always sharing new ideas and discussing different approaches to how we can use emerging technology to develop new ideas for ourselves, our collaborators and our clients.”

Asked why it is important to offer experiences for music fans during this trying time, Freeney acknowledged the growing demand to recreate live events online. While some of these have been breathtaking, Algorithm is keen to discover how new live experiences can be made with all the current restrictions in place. “For us, it’s not about reliving what has been, but endeavouring to create what will be,” he noted.

The response to The Blizzards project, according to Freeney, blew the team away. For something that was made remotely and in total isolation during a pandemic, he believed that directing a piece that resonated with music fans was a big risk, but ultimately a rewarding one. “It was such an experimental project using technology we weren’t 100% familiar with, in the middle of a pandemic, where we’re all learning how to work remotely and can’t see each other in person.”

While half of the narrative in the piece was the actions of the characters in the virtual space, the other half involved the actions of the band in real life – dressing up in MoCap suits in their kitchens and bedrooms, recording data with TeamViewer. “Big ups to The Blizzards for that trust in us. This project also started a lot of internal discussions about what it means to use real-time rendering and simulation software in a film production environment – these are offline renders, but they may as well be live recordings,” he summed up. “This whole music video could be performed live, on stage, with a very high framerate, and that is cool.”

This article originally appeared in issue #254 of TPi.

Photos: Algorithm